In 2020

The purpose of this project

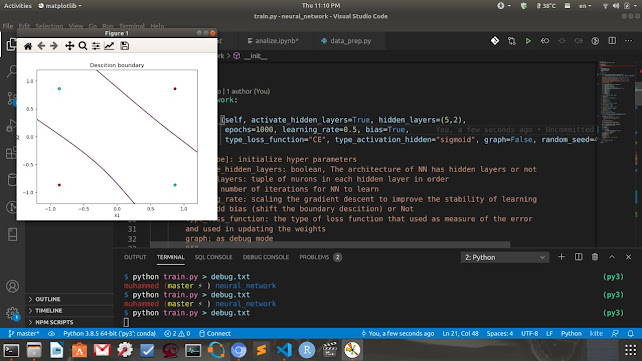

is to implement the magical NN from scratch by applying some math like linear algebra, calculus, and probabilities. And understanding the hyperparameters and implementing various techniques to improve the learning process.

Inventory:

1. Customize the number of hidden layers (n) to make the network as deep as you want
2. Customize the number of neurons in each hidden layer
3. Optionally add a bias term to all layers
4. Early stopping algorithm
5. Optionally add dropout to the layers
6. L1 / L2 regularization
7. Flavors of gradient descent:
   - Batch gradient descent
   - Stochastic gradient descent
   - Mini-batch gradient descent
8. Common activation functions: Sigmoid / Softmax / Tanh / ReLU / ELU
9. Momentum
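To give a feel for a few of the items above, here is a minimal sketch in plain Python (standard library only). It is not the code from the project: the function names (`sigmoid`, `relu`, `elu`, `softmax`, `fit_line`) and all default values are assumptions made for illustration, and the model is a single-feature linear fit rather than a full network, just to keep it short. It shows four of the listed activations (Tanh is already available as `math.tanh`) and one training loop where `batch_size` selects the gradient descent flavor, with momentum and an optional L2 penalty.

```python
import math
import random


def sigmoid(x):
    # Logistic function, written to avoid overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x):
    return max(0.0, x)


def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def fit_line(xs, ys, batch_size, lr=0.01, beta=0.9, l2=0.0, epochs=500, seed=0):
    """Fit y ~ w*x + b by gradient descent with momentum (hypothetical helper).

    batch_size == len(xs)  -> batch gradient descent
    batch_size == 1        -> stochastic gradient descent
    anything in between    -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0      # parameters
    vw, vb = 0.0, 0.0    # momentum "velocity" for each parameter
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            n = len(batch)
            # Gradient of half the mean squared error on this batch,
            # plus the L2 penalty term on the weight (not on the bias).
            gw = sum((w * xs[i] + b - ys[i]) * xs[i] for i in batch) / n + l2 * w
            gb = sum((w * xs[i] + b - ys[i]) for i in batch) / n
            # Momentum: keep a decaying sum of past gradient steps.
            vw = beta * vw - lr * gw
            vb = beta * vb - lr * gb
            w += vw
            b += vb
    return w, b
```

For example, with `xs = [0.0, 1.0, 2.0, 3.0]` and `ys = [1.0, 3.0, 5.0, 7.0]` (points on the line y = 2x + 1), calling `fit_line(xs, ys, batch_size=len(xs))` runs batch gradient descent and `fit_line(xs, ys, batch_size=2)` runs mini-batch gradient descent; both should approach w = 2 and b = 1. Setting `l2` above zero pulls `w` toward zero, which is the regularization effect.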

https://lnkd.in/dyfJDMB

Have fun ^_^

